Two opposing AI strategies in China

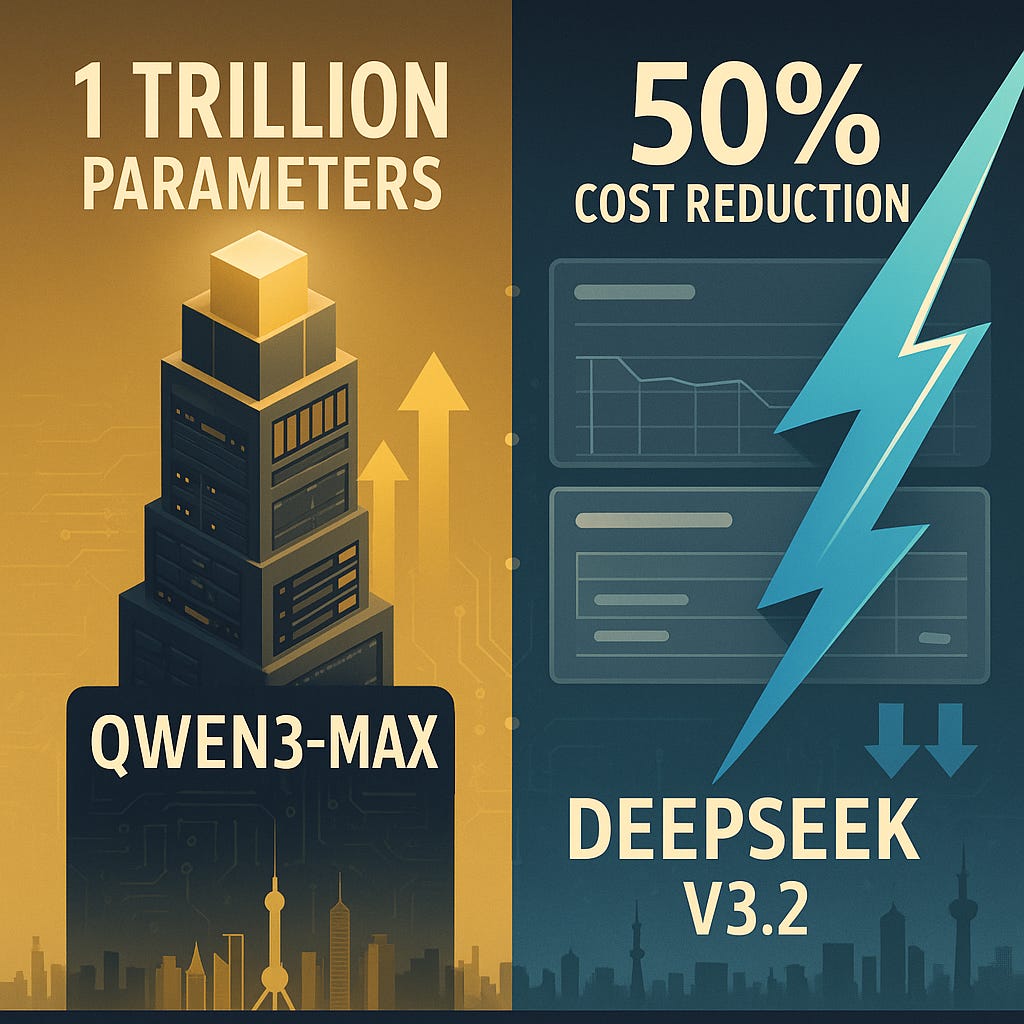

Alibaba’s trillion-parameter Qwen3-Max battles DeepSeek’s 50% cost cuts as competing strategies reshape market dynamics

Qwen3-Max achieved third place globally on LMArena with over 1 trillion parameters, scoring 69.6 on SWE-Bench Verified and surpassing certain GPT-5

DeepSeek cut API prices by over 50% with V3.2-Exp, reducing output costs from ¥12 to ¥3 per million tokens

Price wars intensified across Chinese tech giants: Alibaba slashed Qwen-Long pricing by 97% in May 2024; Baidu made Ernie models free; ByteDance reduced Doubao costs

Qwen3-Max costs ¥43.2 per million output tokens ($6.40), targeting enterprises willing to pay premiums for peak performance, whilst DeepSeek serves cost-sensitive developers at a fraction of the price.

China’s artificial intelligence industry has fractured along two opposing trajectories. Giants pursue trillion-parameter scale. Startups weaponise algorithmic efficiency. The collision reshapes the economics of machine intelligence.

Alibaba released Qwen3-Max in September 2025, a model exceeding 1 trillion parameters trained on 36 trillion tokens. It ranks third on LMArena’s text leaderboard. This places Chinese technology alongside OpenAI and Anthropic at the frontier for the first time.

DeepSeek unveiled V3.2-Exp almost simultaneously, cutting API pricing by over 50% to $0.028 per million input tokens. The reduction stems from architectural breakthroughs, not cash subsidies. Performance matches its predecessor whilst inference costs halve.

Giants bet on scale, startups on efficiency

Qwen3-Max represents China’s most ambitious parameter expansion. The model uses Mixture-of-Experts architecture, activating approximately 500 billion parameters per token from its trillion-parameter pool.

Performance impresses.

It scored 69.6 on SWE-Bench Verified, demonstrating practical software development capabilities. On Tau2-Bench agent testing, it exceeded Claude Opus 4. The reasoning variant achieved perfect scores on AIME 25 and HMMT mathematical benchmarks during training.

Alibaba invested heavily in this “triple-A game” approach, gaming industry parlance for blockbuster productions requiring massive budgets. Only giants command resources for trillion-parameter training runs. The strategy creates quality barriers competitors struggle to cross.

Pricing reflects premium positioning.

Output tokens cost ¥43.2 ($6.40) per million. Input pricing reaches ¥8.64 per million tokens for the 0-32K context tier. This targets enterprises requiring absolute performance for high-value scenarios: financial analysis, complex code generation, mission-critical reasoning.
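To make the premium concrete, the per-request bill can be worked out from the two list prices above. This is a minimal sketch: the request size (20,000 input tokens, 2,000 output tokens) is an illustrative assumption chosen to sit inside the 0-32K tier, and the function name is hypothetical rather than part of any Alibaba SDK.

```python
# List prices quoted in the article, in yuan per million tokens (0-32K context tier).
QWEN3_MAX_INPUT_CNY_PER_M = 8.64
QWEN3_MAX_OUTPUT_CNY_PER_M = 43.2


def request_cost_cny(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Return the cost in yuan of one request billed per million tokens."""
    total = input_tokens * input_price_per_m + output_tokens * output_price_per_m
    return total / 1_000_000


# Assumed request size, for illustration only.
cost = request_cost_cny(
    input_tokens=20_000,
    output_tokens=2_000,
    input_price_per_m=QWEN3_MAX_INPUT_CNY_PER_M,
    output_price_per_m=QWEN3_MAX_OUTPUT_CNY_PER_M,
)
print(f"Cost per request: ¥{cost:.4f}")
```

Under these assumptions a single request costs roughly a quarter of a yuan, which only becomes material at enterprise volumes.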

The scale bet carries strategic imperatives beyond immediate revenue. Cloud providers need flagship models as trust signals when competing for enterprise contracts. A “global third place” ranking provides credibility worth more than API margins.

Yet this creates vulnerability. When startups like DeepSeek achieve comparable performance at half the cost, the premium becomes harder to justify.

Efficiency innovation undermines scale economics

DeepSeek’s V3.2-Exp challenges the scale orthodoxy through algorithmic breakthrough. Its DeepSeek Sparse Attention (DSA) architecture achieves fine-grained attention with minimal quality impact whilst dramatically reducing compute costs.

Traditional Transformer attention mechanisms calculate interactions between every token pair, an O(n²) cost that scales catastrophically for long contexts. Because the growth is quadratic, doubling the context quadruples the attention work, so a 128K token context window consumes vastly more compute than shorter sequences.
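The O(n²) claim can be checked with a quick count of token pairs. This sketch counts pairwise interactions only and ignores constant factors, heads and layers; the 8K comparison window is an assumption picked for illustration.

```python
def dense_attention_pairs(context_tokens: int) -> int:
    """Number of query-key pairs scored by dense attention over one sequence."""
    return context_tokens * context_tokens


short_context = 8 * 1024     # assumed baseline window, for comparison only
long_context = 128 * 1024    # the 128K window mentioned in the article

short_pairs = dense_attention_pairs(short_context)
long_pairs = dense_attention_pairs(long_context)
ratio = long_pairs // short_pairs

print(f"{long_context // short_context}x more tokens -> {ratio}x more pairs")
```

A context 16 times longer needs 256 times as many pair computations, which is why long-context pricing is so sensitive to the attention design.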

DSA employs a two-stage process: a “lightning indexer” identifies relevant context portions, then a “fine-grained selector” chooses specific tokens for detailed attention calculations. This reduces tokens processed per layer significantly.
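The two-stage idea can be illustrated with a toy example in plain Python. This is not DeepSeek's implementation: the index scores here are a simple dot product, the selector is a plain top-k, and every name (`sparse_attention`, `index_query`, `index_keys`, `top_k`) is a hypothetical stand-in for illustration.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_attention(query, keys, values, index_query, index_keys, top_k):
    """Attend only to the top_k positions chosen by a cheap index score."""
    # Stage 1 (stand-in for the indexer): score every position cheaply.
    index_scores = [dot(index_query, ik) for ik in index_keys]
    # Stage 2 (stand-in for the selector): keep the top_k highest-scoring positions.
    ranked = sorted(range(len(keys)), key=lambda i: index_scores[i], reverse=True)
    selected = sorted(ranked[:top_k])
    # Full scaled dot-product attention, restricted to the selected positions.
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, keys[i]) / scale for i in selected])
    dim = len(values[0])
    output = [sum(w * values[i][d] for w, i in zip(weights, selected)) for d in range(dim)]
    return selected, output


keys = [[1, 0], [0, 1], [1, 1], [0, 0]]
values = [[1, 0], [0, 1], [3, 4], [9, 9]]
index_keys = [[1], [0], [2], [-1]]  # low-dimensional keys used only for indexing
selected, output = sparse_attention([1, 0], keys, values, [1], index_keys, top_k=2)
print(selected, output)
```

The expensive attention step touches `top_k` positions instead of all of them, so per-query work grows with `top_k` rather than with the full context length, at the price of running the cheaper index pass over every position.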

The economic transformation is striking.

V3.2-Exp requires less than half the cost per million tokens of V3.1-Terminus for long contexts. Yet performance metrics remain stable: MMLU-Pro holds at 85.0, AIME 2025 improves to 89.3, Codeforces ratings rise from 2,046 to 2,121.

The model trades marginal abstract reasoning capability for substantial cost reduction on practical tasks, a bargain most developers accept gladly.

DeepSeek’s 671 billion total parameters with just 37 billion activated demonstrates that architectural innovation unlocks performance without proportional parameter growth. This directly challenges the Scaling Law orthodoxy dominant since GPT-3.
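The gap between total and activated parameters is easier to see as a ratio. The sketch below uses the article's figures; treating Qwen3-Max as exactly 1,000 billion parameters is an assumption, since the article only says "over 1 trillion" and "approximately 500 billion" activated.

```python
def active_fraction(active_params_bn: float, total_params_bn: float) -> float:
    """Share of a Mixture-of-Experts model's parameters used per token."""
    return active_params_bn / total_params_bn


deepseek = active_fraction(37, 671)    # figures quoted in the article
qwen = active_fraction(500, 1000)      # assumed round numbers from the article's approximations

print(f"DeepSeek V3.2-Exp: {deepseek:.1%} of parameters active per token")
print(f"Qwen3-Max (approx.): {qwen:.1%} of parameters active per token")
```

On these figures DeepSeek activates about one parameter in eighteen per token, against roughly one in two for Qwen3-Max.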

The startup released model weights under MIT License. Open access accelerates adoption whilst educating markets about efficiency-first alternatives to proprietary giants. It also creates strategic vulnerability: any competitor can replicate the innovation.

Price wars destroy margins across the industry

The efficiency breakthrough triggered panic among giants. Alibaba Cloud slashed fees for generative AI models by up to 97% in May 2024, one week after ByteDance launched a rival service costing less than most competitors.

The escalation followed predictable patterns. Hours after Alibaba’s announcement, Baidu made its Ernie Speed and Ernie Lite models free. ByteDance’s Doubao series priced flagship models at ¥0.0008 per 1,000 tokens, essentially free.

The economics are brutal.

IDC reported China’s total large model invocations reached 536.7 trillion tokens in H1 2025. At current Doubao pricing, the entire Model-as-a-Service market generates roughly ¥500m-600m ($70m-84m). Alibaba Cloud’s annual revenue exceeds ¥80bn.
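A back-of-envelope check shows how small these sums are. The sketch assumes, purely for illustration, that every token in IDC's H1 2025 total was billed at Doubao's ¥0.0008 per 1,000 tokens; it then works backwards from the ¥500m-600m range to the blended rate that range would imply. The article does not state which rate it used, so both calculations are shown.

```python
TOKENS_H1_2025 = 536.7e12                 # IDC figure quoted in the article
DOUBAO_CNY_PER_1K = 0.0008                # Doubao flagship price quoted in the article
ALIBABA_CLOUD_ANNUAL_REVENUE_CNY = 80e9   # "exceeds ¥80bn"


def revenue_cny(tokens: float, price_per_1k: float) -> float:
    """Revenue if every token were billed at a flat per-1,000-token price."""
    return tokens / 1000 * price_per_1k


def implied_price_per_1k(revenue: float, tokens: float) -> float:
    """Flat per-1,000-token price that would produce the given revenue."""
    return revenue / tokens * 1000


flat_rate_revenue = revenue_cny(TOKENS_H1_2025, DOUBAO_CNY_PER_1K)
low_rate = implied_price_per_1k(500e6, TOKENS_H1_2025)
high_rate = implied_price_per_1k(600e6, TOKENS_H1_2025)
share = flat_rate_revenue / ALIBABA_CLOUD_ANNUAL_REVENUE_CNY

print(f"Flat-rate H1 revenue: ¥{flat_rate_revenue / 1e6:.0f}m")
print(f"Rate implied by ¥500m-600m: ¥{low_rate:.5f}-{high_rate:.5f} per 1,000 tokens")
print(f"Share of Alibaba Cloud annual revenue: {share:.2%}")
```

A strict ¥0.0008 rate yields a figure somewhat below the quoted range, which corresponds to a blended rate closer to ¥0.001 per 1,000 tokens. Either way, half a year of national token traffic amounts to well under 1% of one cloud provider's annual revenue.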

Giants justify losses through ecosystem strategy. API pricing serves as customer acquisition cost for broader infrastructure sales. Once enterprises deploy on specific clouds, switching costs create lock-in.

Yet the strategy faces execution challenges. Mid-tier models now trade near zero whilst flagships command premiums that shrink under efficiency competition. Giants must defend both flanks simultaneously—an expensive proposition.

Alibaba uses free small models to retain developers whilst positioning Qwen3-Max for premium customers. Baidu offers free Ernie Lite alongside paid Ernie Bot 4.0 subscriptions at ¥59.9 monthly. Each giant splits resources between volume plays and margin preservation.

The numbers suggest limited success. Alibaba’s models serve over 90,000 corporate clients; Baidu’s Ernie Bot reached 85,000. User counts provide leverage for cloud cross-selling but have not yet translated to model profitability.

Startups suffer worse. Unable to subsidise through cloud revenue, they watch margins evaporate. Zhipu AI charges ¥0.1 per 1,000 tokens for GLM-4; MiniMax’s Abab 6.5 costs ¥0.03. Both are priced above DeepSeek but far below Western alternatives, yet neither generates sustainable profits.

Two markets emerge from the wreckage

The scale-efficiency collision creates distinct customer segments with incompatible requirements rather than a unified market.

Enterprise premium tier prioritises absolute performance for high-stakes applications.

Financial institutions modelling risk, pharmaceutical companies analysing molecular interactions, and legal firms processing complex contracts cannot tolerate accuracy degradation. A 2% improvement in prediction accuracy might generate millions in additional value. These customers pay Qwen3-Max premiums willingly.

Developer mass market optimises for cost per successful task rather than peak capability.